New data warehouse, new problems. Whether you spun up Snowflake last week or you’ve been using it before their S1, the potential for data is shifting and expanding faster than you can type a WHERE clause.

And here you are, trying to figure out how to get your data from A to CSV to B. We’re sure the reasons you’re doing so are pure, but there are generally three motivations folks have for exporting their CSV files from Snowflake:

- To import it into other tools as CSV is one of the most common data formats across different tools and platforms.

- To move data across cloud platforms as CSV files can be loaded into your cloud-based relational databases or warehouses.

- To visualize data for analysis and reporting to work with network policy, security, or infrastructure limitations.

Snowflake already provides convenient features for data analysis, but as Adrian Palacios says, “CSV files are the API of the marketing org” because they work with everything.

You might ultimately ask: How can I get my data into Pipedrive? Or maybe an exec wants to pivot the data with trusty old Excel. Either way, we’ve got a few options for you to work with.

Three ways to export CSV data from Snowflake

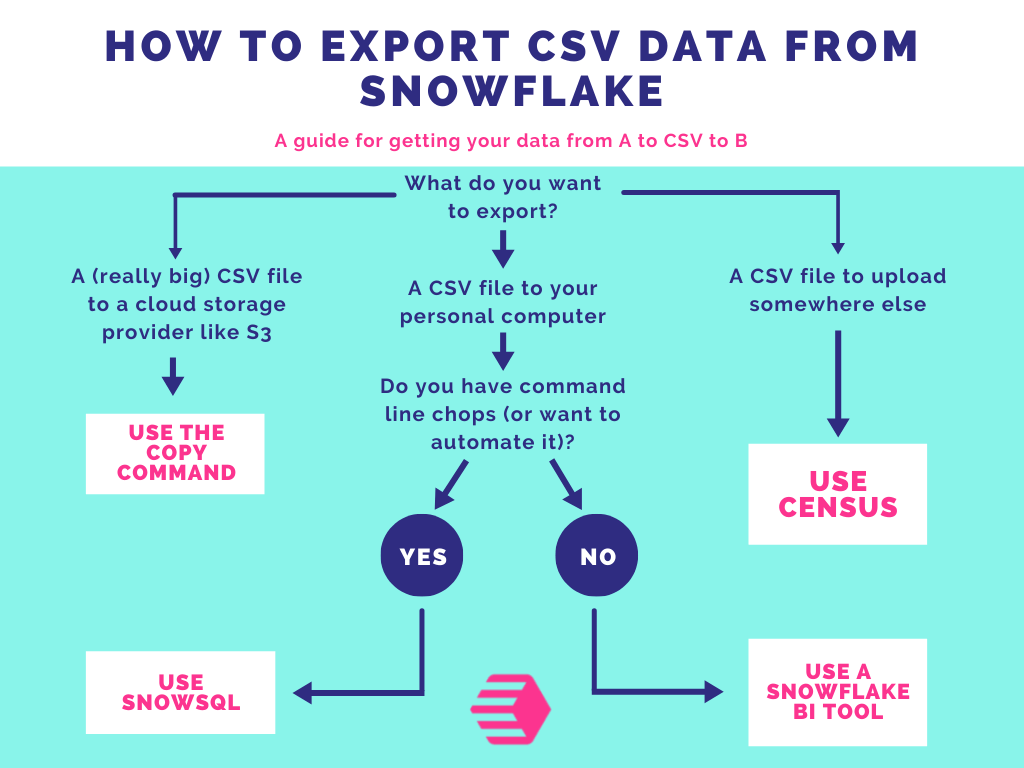

I’m going to spare you from reading this whole post if you already know what outcome you’re looking for. Here’s your “give me my CSV flow” chart:

Quick note: SnowSQL vs. COPY

SnowSQL offers a quick way to export data into your local system (your own computer). However, this feature is also its sticking point if you’re working with a large amount of data as you’ll end up burdening your local option.

In contrast, a COPY command lets you utilize cloud storage (and, by proxy, better performance under large data sizes). Plus, this cloud storage, regardless of AWS, GCP, or Azure, is usually affordable and can be a better option if you need to export large data.

Ok, now that we got those pros and cons out of the way, let’s look at your options. I’ve ordered the list from simplest to most technical, mostly. We kept the “right” (read: smartest) method for the end to avoid any suspicions about favoritism. (Spoiler alert: The right way is to use Census for reverse ETL.)

Anyway, on to the goods.

1. Download a CSV from a BI tool

By far the quickest and easiest way to export CSV files from Snowflake would be to connect Snowflake to a BI tool that allows you to query your Snowflake instance directly and download the query results from that UI. Of course, I’m going to shout out to Mode and Metabase because they both use Census (and because most folks find them really easy to use for SQL analytics). Both options put you just a single click away from exporting a CSV to your desktop.

From Metabase’s web UI, you can find the download button in the bottom right corner of the SQL results lists. From Mode, you can either export CSVs via their API or add a button to a Notebook that allows users to export CSV files.

Here’s the TL;DR:

- Go download Metabase

- Connect Snowflake

- Compose a query

- Click the download button

2. Copy command to save a CSV to cloud storage

If you’re looking for another way to export data, you can always use a COPY command. First, you need an SQL client interface that can connect to your Snowflake. A COPY command becomes powerful when you use it with a cloud platform such as AWS, GCP, or Azure. This command allows you to export a query result into the cloud platform’s storage. For AWS, that’s S3. For CGP, that’s Cloud Storage bucket and for Azure, it’s Azure Container. From there, you can download files into your local storage. If you’re really creative, you can send them on to other destinations from there. (But again, before you do, learn about reverse ETL.)

To get an idea of how a COPY command looks, let’s have a look at a command to export data into S3 in AWS.

copy into s3://mybucket/unload/ from mytable storage_integration = s3_int;The COPY command follows similar rules for GCP and Azure as well.

The copy command consists of an export file path, table name, and connection details. To define your storage integration parameter, refer to the create statement below.

create storage integration s3_int

type = external_stage

storage_provider = s3

storage_aws_role_arn = 'arn:aws:iam::001234567890:role/myrole'

enabled = true

storage_allowed_locations = ('s3://mybucket1/path1/', 's3://mybucket2/path2/');In this case, the parameter names are pretty straightforward and descriptive. The create syntax also follows similar rules for other cloud platforms.

3. SnowSQL CSV export

SnowSQL is a command-line tool that allows you to connect to your Snowflake cluster and run DDL and DML queries. For the uninitiated, DDL stands for Data Definition Language (i.e. queries to manage how data is stored) and DML stands for Data Manipulation Language (i.e. queries to modify the data itself). You can also use it to load and unload data. Depending on your operating system, the installing process may vary.

Of course, you’ll first need to install SnowSQL. When you’re ready to bite into that, check this Snowflake document. For this post, we’re just going to worry about exporting CVSs with SnowSQL.

If you use Linux or macOS, the syntax to export follows as below:

snowsql -c my_example_connection \

-d sales_db \

-s public \

-q 'select * from mytable limit 10' \

-o output_format=csv \

-o header=false \

-o timing=false > output_file.csvLet’s take a look at the parameters you may not know from the code above.

- All SnowSQL commands start with snowsql.

- -c or --connection is the named set of connection parameters

- My_example_connection is the connection name you defined in the config file. You can create a connection alias following these instructions.

- -d is the name of the database

- -s is the schema name

- -q is for query as that would determine the contents of your CSV file

- -o for option

- -o timing=false turns off timestamping

- > output_file.csv is the filename and destination of the CSV file. In this case, it would be in the working directory

If you want to run it in Windows terminal, you only need to change the single quotes to double quotes for the select query.

snowsql -c my_example_connection \

-d sales_db \

-s public \

-q "select * from mytable limit 10" \

-o output_format=csv > output_file.csvUsing SnowSQL, you can quickly export a query result in your operating system without having to open a separate SQL client. If you’re a command line wiz already, you could even pipe that via email right to your boss.

The right way to move data out of Snowflake

If you’ve made it this far, welcome to the new frontier of data. I’d like to introduce to you a tool that’s going to blow your mind (and make your life a lot easier): Census. We’re the originators of the term “reverse ETL” and believe that operational analytics drives action by automatically delivering real-time data to the exact place it’ll be most useful at the exact moment it’s needed most, no matter where that destination is in your organization.

We sync your data between Snowflake (and other popular data warehouses) to destinations such as Salesforce, Hubspot, Zendesk, Facebook Ads, and Braze. You can get started syncing up to 10 tools for free. What are you waiting for? Sign up here.

What you’ve learned: All the ways to export CSV files from Snowflake

In this article, we learned about Snowflake’s file exporting features and probably covered a few new-to-you methods and tools (at least, we hope so). You can choose either BI tools, SnowSQL, COPY commands, or Census to move your data depending on your data size and target destination. At the end, where there’s a will, there’s a way (to persist your data into CSV files.)