In this article, we'll cover:

- How to approach operationalization

- The tools that are making an impact

- 9 Prompts our head of data has developed and deployed

How many “groundbreaking” AI features have been launched that basically amount to asking AEs and CSMs to interact with a chatbot?

While these copilots have a lot of potential for improving productivity and making tedious or manual tasks easier, chatbots alone aren’t quite living up to the revolutionary promise of AI.

To reach that potential, companies need to move from one-off AI interactions on the individual level to large-scale, operationalized use cases that drive the business forward.

What is operationalized AI?

As we discuss the value of different AI solutions, we’ll use three criteria to determine which are ready to drive meaningful use cases and which aren’t quite ready for prime time.

- Trusted: AI needs to work within the boundaries of its own capability. Operationalizing requires guardrails that keep poor information or incorrect decisions from having a catastrophic impact, and it demands tooling that is transparent and configurable. Trusted AI tools will allow you to test and iterate at speed to optimize prompts and check outputs.

- Scaled: Operationalized AI needs to be baked into the company’s foundation. It can’t rely on individual employees using a tool or even adopting a feature. Instead, it needs to be enabling and empowering employees in the places they already work.

- Impactful: Operationalized AI can’t just be a party trick. It needs to meaningfully move numbers or unblock projects that wouldn’t have been possible before. For example, many platforms have released AI-driven productivity hacks: pre-generated prompts or thought starters for helping employees write communications or summarize notes. And while these features can be useful on an individual level, truly operationalized AI needs to have a measurable impact that goes beyond saving a few seconds.

Ops teams are uniquely positioned to bring AI into their businesses

For AI to be trusted, scaled and impactful, it needs to be centrally deployed by a team that already has a deep understanding of both governance and getting business teams to adopt new workflows and tools. A company that diffuses the writing and deployment of prompts is also diffusing trust.

This makes the ops leader uniquely positioned to own AI initiatives. Because ops teams touch cross-functional processes directly and act as the owners of internal data products, they are in a position to launch large-scale AI initiatives that balance impact and governance.

Managing a few high-impact prompts directly from the warehouse allows ops teams to create a structured testing strategy and centralize feedback across many employees deploying the models. Leaving prompting in the hands of many teams means that models are deployed without structure - leading to repetition of mistakes.

To operationalize AI meaningfully, ops teams need both a strategic vision and the resources to drive good implementation.

Impactful AI strategy depends on good data - but you're more ready than you think

Data has been at the core of the AI conversation since the very beginning. Name a news outlet, analyst or consultancy and the odds are high they’ve published something to the tune of “Get your data AI-ready.” We don’t disagree - quality data is essential for any operationalized AI strategy.

But why are we focusing the conversation on data as a problem to overcome, rather than our most powerful vehicle for change?

Leveraging AI to deliver better data at scale is one of the biggest opportunities to flex the power of LLMs. And it doesn’t require a fundamental transformation of how we do business. Companies already have the infrastructure to impact large-scale initiatives with data, and business teams are already trained to integrate data-powered personalization and decision-making into their daily work. In this way, AI used correctly can be both the impetus to change and the solution.

An AI strategy that starts in the data warehouse can have cascading impacts across the business - and when it comes from ops, the transformation required doesn’t have to be disruptive.

The playbook is what it’s always been: use best-in-class data platforms to activate deeper, more informed, and more meaningful customer interactions across your tech stack.

Good AI outcomes depend on good prompting and rollout

There is a wide gap between prompts that get the job done on a small scale and prompts that are specific yet flexible enough to handle large scale work without careful oversight. The holy grail of AI is a prompt that works in the background, reliably running complex tasks without manual input or correction.

But getting there takes a lot of testing. Writing, running and tweaking prompts is a long process even for those who already deeply understand LLMs. Take our word for it - our data team spent hours iterating on just one of the prompts we included in this guide.

This is why the ops community is such a powerful tool for jumpstarting AI adoption - sharing the prompts that work (and those that don’t) can provide a springboard for other ops leaders looking to experiment with AI.

The AI landscape in ops tooling

While many AI products are still nascent, we see three buckets of AI tooling emerging in the market. When looking to operationalize AI, we want tools that fall into the sweet spot of deeply impactful, bringing a unique value or POV into our tech stack, but at the same time are easy to use and accessible to those without a developer skillset. These tools need to be able to send outputs directly to a place where they can be activated at scale and should involve a low time cost for the user.

The AI tools we're watching

Snowflake Cortex: Allows users to access AI directly from their SQL. Use cases include sentiment analysis, translating text, and data classification. It also provides a query optimization copilot.

Census AI Columns: Census AI Columns allow users to write new columns onto their warehouse without SQL by leveraging a liquid template-driven AI prompting engine. Use cases include

Clay: An AI-powered enrichment tool built for revops teams. It allows users to import a list of leads and automatically fill in answers to questions about key competitors, investors, and SOC 2 compliance straight into a column within the table.

Databricks AI Functions: A set of SQL functions that allow users to include input from an AI. The capabilities include data classification, fixing grammar, sentiment analysis, or entity masking.

Salesforce Einstein: The AI engine of Salesforce Data Cloud. It’s a copilot that can surface relevant information to reps and provide next best action recommendations for users of Marketing Cloud.

How can Ops leaders use AI?

So, let’s talk about the operationalized use cases these tools can offer. The sweet spot for using LLMs in a way that is both high-trust and high-impact will be in three areas:

- Accessing knowledge: LLMs are incredibly powerful at pulling knowledge from public sources and applying it to your questions. This makes data enrichment a natural use case for AI tooling.

- Removing tedium at scale: LLMs shine at deeply manual or repetitive work - like cleaning and formatting data. We use our own AI Columns tool in-house to prepare our data for syncing.

- Complex analysis: LLMs are capable of ingesting many data points at once to arrive at clear, digestible conclusions. Classifying, summarizing, and applying layers of insight like sentiment analysis are all prime for AI automation.

Let’s dive into specific use cases for each.

The best prompts for Ops Leaders

| | Classify and Summarize | Enrich and Enhance | Clean and Format |
| --- | --- | --- | --- |
| Lowest Complexity | Summarize reviews to prioritize areas for improvement | Add industry data to account records | Enforce an enum format to cleanly classify accounts |
| Medium Complexity | Apply sentiment analysis to outbound responses | Generate personalized outbounds | Translate text |
| Highest Complexity | Analyze product usage trends to provide personalized support | Input detailed account recommendations in Salesforce | Remove special characters or reformat without Regex |

Classify and Summarize Information

Summarize reviews to prioritize areas for improvement

Challenge: Parsing plain text into clear categories for analysis is a time-consuming process. But it’s essential for businesses that need to understand their reviews or free-response feedback at scale. And it’s not just for B2C companies - many B2B companies receive free response feedback from their users, but don’t have the capacity to review it all.

Potential: An LLM can make this process easy by summarizing the text neatly. And a powerful prompt engine can take it even further with enum values that limit responses to a specified list of potential values.

The Prompt:

Since many of our customers work with global reviews, we like to include a note to ensure that all of the reviews are in English. But if you are operating in a single market, feel free to omit this portion of the prompt.
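To make the enum idea concrete, here is a minimal sketch of how a classification prompt and its response check could fit together. It is an illustration, not the prompt we run: the category list and the function names `build_review_prompt` and `parse_enum_response` are hypothetical, and the model call itself is left out.

```python
from typing import List, Optional

# Hypothetical category list; replace with the themes that matter to your team.
REVIEW_CATEGORIES = ["pricing", "onboarding", "performance", "support", "other"]


def build_review_prompt(review_text: str, categories: List[str]) -> str:
    """Assemble a classification prompt that restricts the answer to a fixed list."""
    allowed = ", ".join(categories)
    return (
        "Classify the review below by the product area it most concerns. "
        "If the review is not in English, translate it to English first.\n"
        f"Respond with exactly one of these values and nothing else: {allowed}.\n\n"
        f"Review: {review_text}"
    )


def parse_enum_response(response: str, categories: List[str]) -> Optional[str]:
    """Return the matching category, or None if the model strayed from the list."""
    cleaned = response.strip().strip(".").lower()
    return cleaned if cleaned in categories else None
```

Rejecting anything outside the list (rather than storing it as-is) keeps the resulting column safe to group and pivot on, at the cost of a few rows that need a retry or a manual look.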

Apply sentiment analysis to outbound responses

Challenge: Traditional outbound reporting can measure open and response rates, and can measure the rate of booking meetings, but it can’t actually measure the quality of responses that a given email is eliciting.

Potential: With sentiment analysis, an ops team can flag email responses to determine which emails are getting positive responses. They can also flag accounts that are responding with snarky or annoyed messages and add them to lists of contacts that may need a “cooling off” period before continued outreach.

The Prompt:

To give the LLM some structure, we like to classify the responses into four categories. Interested and enthusiastic replies are weighted more heavily when we judge the success of our outbound. We make note of snarky or annoyed responses to avoid over-outbounding an account that isn't interested in our comms.
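Once each reply carries a label, the weighting and the cooling-off list are simple to compute. The sketch below assumes four illustrative labels and weights (the exact categories are not listed here, so treat `REPLY_WEIGHTS` and `COOL_OFF_LABELS` as placeholders) and a hypothetical `score_campaigns` helper.

```python
from collections import defaultdict

# Illustrative labels and weights; the exact four categories are an assumption.
REPLY_WEIGHTS = {"enthusiastic": 2.0, "interested": 1.0, "neutral": 0.0, "annoyed": -1.0}
COOL_OFF_LABELS = {"annoyed"}


def score_campaigns(replies):
    """Sum reply weights per campaign and collect accounts that need a cooling-off period.

    `replies` is an iterable of (campaign, account, label) tuples, where `label`
    is the category the LLM assigned to the reply. Unknown labels count as zero.
    """
    scores = defaultdict(float)
    cool_off = set()
    for campaign, account, label in replies:
        scores[campaign] += REPLY_WEIGHTS.get(label, 0.0)
        if label in COOL_OFF_LABELS:
            cool_off.add(account)
    return dict(scores), cool_off
```

The returned set of accounts can feed a suppression list, while the per-campaign scores give a quality signal alongside open and response rates.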

How it's used:

Analyze product usage trends to provide personalized support

The Challenge: Product adoption is key to keeping churn low and growing your footprint in every account. And personalized coaching is an excellent way to ensure that every customer sees the value of your product. But maintaining a close relationship with each customer isn't usually sustainable. Support and product consulting teams are often left to prioritize the most important accounts, while smaller accounts are stuck with auto-generated feature update and usage summary emails.

The Potential: With LLMs, support and account management teams can provide personalized recommendations at scale. With the ability to draw meaning from in-product actions and marketing interactions, an AI can extrapolate what an account is interested in and where it might need support. It can even auto-generate an email for a support person to send out regularly, recommending resources and helpful tips.

The Prompt:

First, we instruct the LLM on how to parse the data it has been given. We use a JSON formatted cell to hold the account's activity data. Then, we instruct the LLM to provide insights in a specific format.

The LLM then outputs its insights, which we sync directly to our reps.
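As a rough illustration of that two-part structure, the sketch below packs an account's activity into a single JSON string and wraps it in a prompt that first describes the JSON layout and then fixes the output format. The key names (`account`, `recent_events`, `event`, `feature`, `date`), the three output lines, and both function names are assumptions made for the example.

```python
import json


def build_activity_cell(account_name, events):
    """Serialize an account's recent actions into one JSON string (one warehouse cell)."""
    return json.dumps({"account": account_name, "recent_events": events}, sort_keys=True)


def build_insight_prompt(activity_cell):
    """Explain the JSON layout to the model, then ask for insights in a fixed format."""
    return (
        "You will receive a JSON object with two keys: 'account' (the company name) "
        "and 'recent_events' (a list of objects with 'event', 'feature', and 'date').\n"
        "Reply with exactly three lines:\n"
        "Interest: <what the account appears to care about>\n"
        "Gap: <where they may need support>\n"
        "Next step: <one resource or tip to recommend>\n\n"
        f"Data: {activity_cell}"
    )
```

Describing the keys up front and pinning the reply to labeled lines makes the output easier to split into fields before it is synced anywhere.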


How It's Used: We're using a JSON populated with information about the company's most recent actions to feed this prompt. We can then route this into an automated email, or into Salesforce to surface it to reps before they chat with their contacts.

Enrich and Enhance data with AI

Add industry data to account records

The challenge: Having clean industry data is often a prerequisite for meaningful solutions selling and marketing. But this data is also notoriously complex to get. Even companies using enrichment partners like ZoomInfo often end up with overly broad industry categories, or mis-categorizations that make the data nearly unusable. Fixing the issue is often a months-long endeavor.

The potential: With GTM teams able to quickly assign SIC codes or industry descriptions at the right level of specificity, teams can quickly analyze their best areas for product market fit, prioritize investment opportunities, or assign appropriate labels for personalized outreach.

The Prompt:

We like to request a classification by SIC (Standard Industrial Classification) code, the four-digit industry code the SEC uses in company filings. This encourages the model to provide more structured responses that are easier to group.

The LLM can easily be instructed to provide more granular or general recommendations to right-size the specificity to your use case.

This can return something good enough to get us started, but what if we want something more specific? We can easily layer on prompts to achieve the right level of specificity. For example, classifying a company explicitly as a B2B SaaS company, or specifying within a "manufacturing" designation what the company makes and creating groupings out of these. The prompts can easily be iterated upon to arrive at the required level of insight to power reporting or segmented marketing.
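Because SIC codes are four digits and the first two identify the major group, a response can be checked and rolled up with very little code. The sketch below is one possible guardrail, with hypothetical helper names; it only checks the shape of the answer and does not confirm that the code exists in the official SIC list.

```python
import re
from typing import Optional

# A standalone run of exactly four digits, e.g. "7372".
SIC_PATTERN = re.compile(r"\b(\d{4})\b")


def extract_sic_code(response: str) -> Optional[str]:
    """Pull the first standalone four-digit code out of a model response, if any."""
    match = SIC_PATTERN.search(response)
    return match.group(1) if match else None


def major_group(sic_code: str) -> str:
    """The first two digits of a SIC code identify its major group, a coarser rollup."""
    return sic_code[:2]
```

Grouping on `major_group` gives the more general view, while the full code keeps the granular one, so a single column can serve both levels of specificity.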

How it's used:

Generate personalized emails based on account activity

Challenge: Personalization is the name of the game in outbounding. But a simple form-fill driven email in the format “hi, [firstname], I saw [company] is interested in [service]!” is easy to spot. Many reps are using AI to perform basic account research to drive their outreach, but this is still a deeply manual process.

Potential: With the generative capabilities of LLMs, we can do personalized sales outbounding at scale. By working out of our data repository, we can easily take in behavioral data or context from account records and route email context directly to automation platforms like Apollo. The results go much deeper than a simple dynamic field - the entire email can be personalized to the contact’s persona, use case and recent activities.

The Prompt:

A basic iteration of this prompt asks the LLM to reference our case study page and generate a personalized outbound email, with parameters for what makes a good and bad email:

To increase the complexity, we like to pair this with a JSON column that combines our event stream with information about our web pages, like the one shown in our product activity prompt.

How it's used:

We route these emails into Salesforce to prompt our reps with next best action recommendations.

Input detailed account recommendations in Salesforce

Challenge: Keeping track of many lower-touch accounts or managing handoffs can be a risky process for customer facing teams. Delivering a high bar for personalized service is paramount to preventing churn, but doesn’t easily scale.

Potential: With an LLM constantly summarizing insights, teams can keep a pulse on what content an account is reading, how they’re using the product, and what their priorities might be - without the lift of manual research. Doing this at scale and piping results directly into Salesforce can bake this bar of excellence into a team’s ways of working, without any manual changes on the part of sales reps.

The Prompt:

First, we explain the structure of our reference data to the LLM so that it understands how to parse the JSON. Then, we instruct the LLM to structure the insights we want our reps to have in Salesforce:

How it's used:

We like to pair prompts like this with a Python script we created to crawl our webpages and assign likely intents and summaries. We then join this with our event stream to get a list of an account's activity and what it likely means.

Because the context windows on new LLMs are so large, the model can easily parse these cells into summarized insights.
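The join described above can be sketched in a few lines. This is not the script itself: `PAGE_INTENTS` stands in for the crawler's output, and the event fields (`account`, `path`, `timestamp`) and the function name are assumptions for illustration.

```python
import json
from collections import defaultdict

# Hypothetical output of the page crawler: URL path -> likely intent and summary.
PAGE_INTENTS = {
    "/pricing": {"intent": "evaluating cost", "summary": "Plan and pricing details"},
    "/docs/salesforce": {"intent": "implementing a sync", "summary": "Salesforce setup guide"},
}


def build_account_activity(events, page_intents):
    """Join page-view events with crawled page intents, grouped by account.

    `events` is a list of dicts with 'account', 'path', and 'timestamp' keys.
    Returns a dict mapping each account to a JSON string ready to store in one cell.
    """
    by_account = defaultdict(list)
    for event in sorted(events, key=lambda e: e["timestamp"]):
        # Pages the crawler has not seen are kept, but marked as unknown intent.
        page = page_intents.get(event["path"], {"intent": "unknown", "summary": ""})
        by_account[event["account"]].append(
            {"timestamp": event["timestamp"], "path": event["path"], **page}
        )
    return {account: json.dumps(rows) for account, rows in by_account.items()}
```

Each resulting JSON string is what the prompt would receive as its reference data for one account.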


Clean and Format Data

Enforce an enum format to cleanly classify accounts

The Challenge: Poorly formatted data is the quiet killer of productivity. While it seems like a small issue, getting data into a format that can be easily pivoted out or analyzed can be a major source of delays.

The Potential: With LLMs, we can remove the tedium from manually fixing up data that has no easy fix. We can make discrete categories, clean close matches into exact matches, and simplify the process of analyzing data fed by manual entry with potential for mistakes.
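One way to back up an enum-constrained prompt is a deterministic check that snaps near-misses to the allowed list and rejects everything else. The sketch below uses Python's standard `difflib` for the fuzzy match, which is a stand-in chosen for the example rather than part of the prompt; the tier names, the `cutoff` value, and `snap_to_enum` are hypothetical.

```python
import difflib
from typing import List, Optional

# Hypothetical allowed values for an account-tier column.
ACCOUNT_TIERS = ["Enterprise", "Mid-Market", "SMB"]


def snap_to_enum(value: str, allowed: List[str], cutoff: float = 0.8) -> Optional[str]:
    """Map a near-miss such as 'enterprise ' or 'Mid Market' to its exact enum value."""
    lookup = {option.lower(): option for option in allowed}
    candidate = value.strip().lower()
    matches = difflib.get_close_matches(candidate, list(lookup), n=1, cutoff=cutoff)
    return lookup[matches[0]] if matches else None
```

Values that come back as `None` are the ones worth sending to the LLM or to a person, since a string-similarity check cannot tell that "Fortune 500" probably means "Enterprise".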

The prompt:


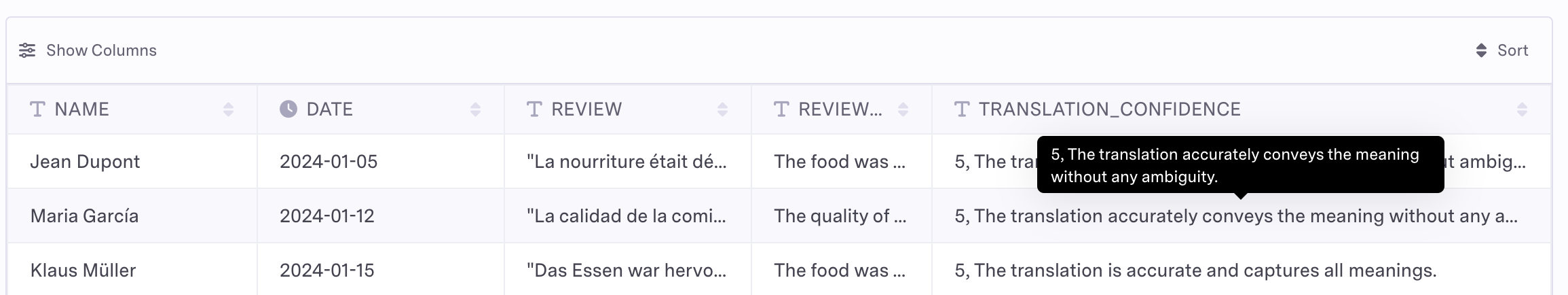

Translate text

The Challenge: For companies that do business internationally or in countries where multiple languages are spoken, analyzing free response reviews and inputs can be a messy process. Consider, for instance, an American tech company expanding into EMEA. Often one of the best growth markets for these companies is the Benelux region: Belgium, the Netherlands, and Luxembourg. But each of these countries has multiple commonly spoken languages. To analyze their reviews or feedback by region or country, these companies will need to either hire a data analyst who speaks many languages, or find a way to normalize this data into a common language.

The Potential: With an LLM capable of reliably translating data in the warehouse, companies can normalize their source of truth into their language of business. And as a bonus, they can layer in an ask to further categorize this data into easily analyzed buckets.

The Prompt:

But simple internet-based translation has been possible for decades. The complexity of translation comes from checking the quality. To improve the outputs we receive from this simple prompt, we use a secondary column to get the LLM to output a confidence score. With this, we can filter our final analysis to ensure we are only considering translations for which the model is highly confident.
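The filtering step is easy to sketch once the confidence score sits in its own column. The example below assumes the score is on a 0 to 1 scale and uses a hypothetical threshold of 0.9 and hypothetical column names; a self-reported score is a heuristic, so it is worth spot-checking against a sample of known translations.

```python
def filter_confident_translations(rows, threshold=0.9):
    """Keep rows whose model-reported confidence meets the threshold.

    Each row is a dict with 'translation' and 'confidence' keys; rows with a
    missing or non-numeric confidence are dropped rather than trusted.
    """
    kept = []
    for row in rows:
        try:
            confidence = float(row.get("confidence"))
        except (TypeError, ValueError):
            continue
        if confidence >= threshold:
            kept.append(row)
    return kept
```

Dropping rows with an unparseable score is a deliberately conservative choice: a translation with no usable confidence value is treated the same as a low-confidence one.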

How It's Used:

We like to use this in combination with our classification prompts to take a list of global reviews in multiple languages and turn them into structured data. We enforce an enum response format to ensure we are getting clean data.

Remove special characters or reformat without Regex

The Challenge: Data cleanup can be time consuming and highly manual. It can eat away at resources you could be using to do more visible, impactful work. But it remains a key limitation to syncing and activating data.

The Potential: LLMs are made for repetitive, structured tasks like data cleanup. With a clear set of parameters, an AI can clean records at scale to increase activation speed.

The Prompt:

This type of prompt has many implementations. Here we've used it to remove leading and trailing spaces that may be difficult to spot:
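A cleanup prompt should change formatting and nothing else, so it can help to verify that before writing the result back. The sketch below is one possible check, with a hypothetical function name: it accepts a cleaned value only if the edge whitespace is gone and the non-whitespace characters are unchanged and in the same order.

```python
def preserves_content(original: str, cleaned: str) -> bool:
    """True if `cleaned` has no edge whitespace and the same non-space characters, in order."""
    same_characters = "".join(original.split()) == "".join(cleaned.split())
    return same_characters and cleaned == cleaned.strip()
```

For example, `preserves_content("  Acme Corp ", "Acme Corp")` passes, while a response that "helpfully" expands the value to "Acme Corporation" does not and can be routed for review.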

Taking the first step

Operationalizing AI can be a massive, highly visible win for ops teams. Getting started comes with a high testing and implementation barrier, but with the right approach, teams can begin without massive tech and process transformations. A few of our learnings from implementing AI models in our GTM ops:

- Get your data ready for AI by testing AI: Simple tasks like data cleanup are easy to prompt and low-stakes to implement. And more complex tasks, like indexing your webpages by intent, can bring a lot of richness to your data without risking any poor customer outcomes.

- Scale thoughtfully by deploying to reference tools first: Our first forays into deploying AI routed into account descriptions and notes. This gave our reps a chance to manually review the output for errors while still receiving some value. Through this feedback process, we ensured not only that the prompt wasn't returning bad data, but that the data provided was the most important and impactful information.

- Test, iterate, and iterate again: All of our best prompts have been multi-round tests. Great prompting relies on experimentation. For this reason, we can't overstate the value of tools that allow you to iteratively create prompts and review outputs.

Questions? Feel free to reach out to our team of ace ops leaders. Happy prompting!
