In this article we’ll cover:

- Some basic facts about LLMs and what they mean for prompt structuring

- Tips for writing a stronger prompt

- Examples for writing a useful sales outreach-focused prompt

- A guide for how ops leaders can turn one-off prompts into automated workflows

Handing time-consuming, manual work to AI tools is a great way to save time. But the quality of your output depends heavily on the quality of your prompt, and LLMs have some quirks that might not be immediately obvious.

Every day, we read tips on how tech employees can use LLMs: to write outbound emails, perform account research, or find trends in our data. But unless we're writing excellent prompts, we run the risk of getting responses that are subpar or outright wrong.

So how do we control our output without creating too much work for ourselves?

A Brief Introduction to How LLMs Think

- LLMs break things down into tokens: The definition of a “token” can vary by model, but tokens generally represent parts of words, roughly 4 characters of English text on average. This helps explain the viral “ChatGPT doesn't know how many r's are in strawberry” discussion: the LLM doesn't read words letter by letter, but as groupings of characters.

- Many LLM providers bill by the number of tokens processed (both input and output)

- The token is the base unit of the model’s understanding, and tokens are processed as numeric values rather than as words.

- LLMs consider parts of your prompt in order and have a limited context window: LLMs process prompts token by token, meaning that the order of words matters for how the prompt is understood. Each model has a limited number of tokens it can “remember” at once. These windows vary by model: for example, the original GPT-3 models had a window of 2,048 tokens, while GPT-4 launched with 8,192-token and 32,768-token versions.

- Information at the end of a prompt is generally “fresher” in the LLM’s memory.

- They have attention mechanisms that help them connect and prioritize concepts: LLMs weigh the relative importance of different parts of the prompt when generating a response.

- Formatting like numbers and bullets helps separate distinct concepts

- Restating the most important parts of your prompt may help reinforce your priorities
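To make the token math concrete, here's a rough Python sketch that estimates a prompt's token count with the ~4-characters-per-token rule of thumb, checks whether the prompt plus its response fits in a context window, and estimates cost. The constant, function names, and prices are assumptions for illustration only; real tokenizers and per-token prices differ by model and provider, so use your provider's own tokenizer and price sheet for real numbers.

```python
# Rough token and cost estimates using the ~4-characters-per-token rule of
# thumb for English text. CHARS_PER_TOKEN and the prices below are
# assumptions; real tokenizers and pricing differ by model and provider.
CHARS_PER_TOKEN = 4


def estimate_tokens(text: str) -> int:
    """Approximate a token count, rounding up so any non-empty text is >= 1."""
    return -(-len(text) // CHARS_PER_TOKEN)  # ceiling division


def fits_in_context(prompt: str, expected_output_tokens: int, context_window: int) -> bool:
    """The prompt and the response both have to fit in the model's window."""
    return estimate_tokens(prompt) + expected_output_tokens <= context_window


def estimate_cost(prompt: str, output_tokens: int,
                  input_price_per_1k: float, output_price_per_1k: float) -> float:
    """Dollar estimate: input and output tokens are often priced differently."""
    input_tokens = estimate_tokens(prompt)
    return (input_tokens / 1000) * input_price_per_1k + (output_tokens / 1000) * output_price_per_1k


if __name__ == "__main__":
    prompt = "Write a three-sentence sales email to a VP of Marketing."
    print(estimate_tokens(prompt))
    print(fits_in_context(prompt, expected_output_tokens=500, context_window=2048))
    # Hypothetical prices: compare a bare answer (~5 tokens) with a
    # 300-token explanation of how the model got there.
    for output_tokens in (5, 300):
        print(output_tokens, round(estimate_cost(prompt, output_tokens, 0.01, 0.03), 5))
```

With those made-up prices, the 300-token explanation costs far more than the short prompt itself, which is the billing argument for asking only for the output you need.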

Writing Strong Prompts for AI tools

With these factors in mind, here are a few tips our team uses to write better AI prompts.

- An LLM is an intern, not a peer: These models are incredibly good at answering direct requests, but they also rely entirely on you to understand what is important. The more specific your prompt, the better the model's output is likely to be. Some questions to consider:

- How long of a response are you looking for? Three bullets? 250 characters? An essay?

- Where is the LLM meant to start its research? Is there context it should consider? Are there sites that have better information than others?

- Are there any constraints it should follow or anything it should NOT do, such as respecting privacy requirements or avoiding plagiarism?

- Define what good looks like: Describe the qualities of a successful output, such as its length, tone, and what it must include.

- Lay out the steps sequentially: This will help the LLM understand what your priorities are and which concepts are separate.

- Number the steps to make the sequence clear

- Reiterate the most important step at the bottom

- Format the response: Many LLMs will add confirmations and pleasantries to their responses, or explain how they arrived at a conclusion. Tell the model exactly what form its output should take.

- Decide whether you want an explanation: Sometimes, the LLM will provide a lengthy explanation when all you need is a number. This doesn't feel like a big deal until you remember that many LLM providers bill by the number of tokens ingested and returned.

- Consider requesting a confidence score: You can request this as a "low/med/high" indicator or a percentage score. Either way, it can help you judge whether the LLM is grasping at straws to fulfill your request.

- Remind the model to consider the full prompt at the end
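Put together, these tips can be baked into a reusable template so nobody has to remember them every time. Here's a minimal Python sketch under our own assumptions: `build_prompt` and `parse_confidence` are hypothetical helpers, not part of any AI tool's API, and the exact wording of the reminder and confidence instructions is just one reasonable choice.

```python
import re
from typing import List, Optional


def build_prompt(context: str, steps: List[str], good_looks_like: List[str],
                 output_format: str, ask_confidence: bool = True) -> str:
    """Assemble a prompt: context, numbered steps, success criteria, format, reminder."""
    lines = [context.strip(), "", "Your task is to:"]
    # Numbered steps make the sequence, and the boundaries between tasks, explicit.
    lines += [f"{i}. {step}" for i, step in enumerate(steps, start=1)]
    # Spell out what good looks like as a checklist.
    lines += ["", "A good response:"]
    lines += [f"- {criterion}" for criterion in good_looks_like]
    # Say exactly what shape the output should take.
    lines += ["", f"Output format: {output_format}"]
    if ask_confidence:
        lines.append("On the last line, write 'Confidence: low', 'Confidence: med', or 'Confidence: high'.")
    # Restate the key instruction at the end, where it is "freshest".
    lines += ["", "Before answering, consider all of the instructions above. "
                  "Keep the output brief and give no explanation beyond what is requested."]
    return "\n".join(lines)


def parse_confidence(response: str) -> Optional[str]:
    """Return 'low', 'med' or 'high' from a 'Confidence: ...' line, or None if absent."""
    match = re.search(r"^Confidence:\s*(low|med|high)\s*$", response,
                      flags=re.IGNORECASE | re.MULTILINE)
    return match.group(1).lower() if match else None


if __name__ == "__main__":
    print(build_prompt(
        context="You are helping an account executive research a prospect.",
        steps=["Summarize what the company does.", "List two challenges it likely faces."],
        good_looks_like=["Three bullets or fewer", "Names where each fact came from"],
        output_format="Bullets only, no greeting or sign-off.",
    ))
    print(parse_confidence("- Sells payroll software\nConfidence: High"))
```

Keeping the confidence label on its own line makes it easy to filter out low-confidence answers automatically before anyone reads them.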

Let’s Prompt: Writing a better sales email

One commonly recommended use case for LLMs is helping AEs handle outbound more efficiently. Performing account research and writing outbound emails can be much faster with the support of an AI. Let’s walk through this case:

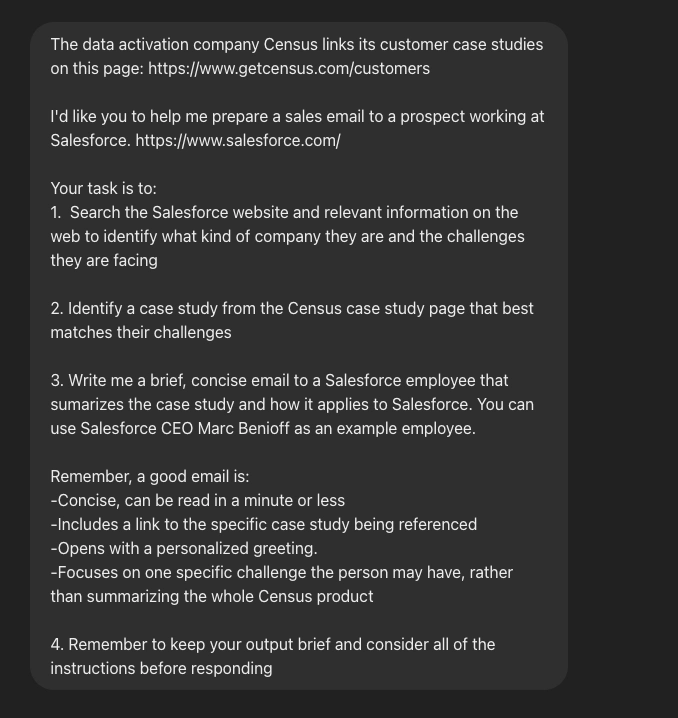

Many prompts begin like this: a simple, straightforward request.

But the results come out like this:

This is certainly text we didn’t have to write, but it probably won’t get many responses. The email is long, unfocused, and generic. So let’s try again and apply some of our tips.

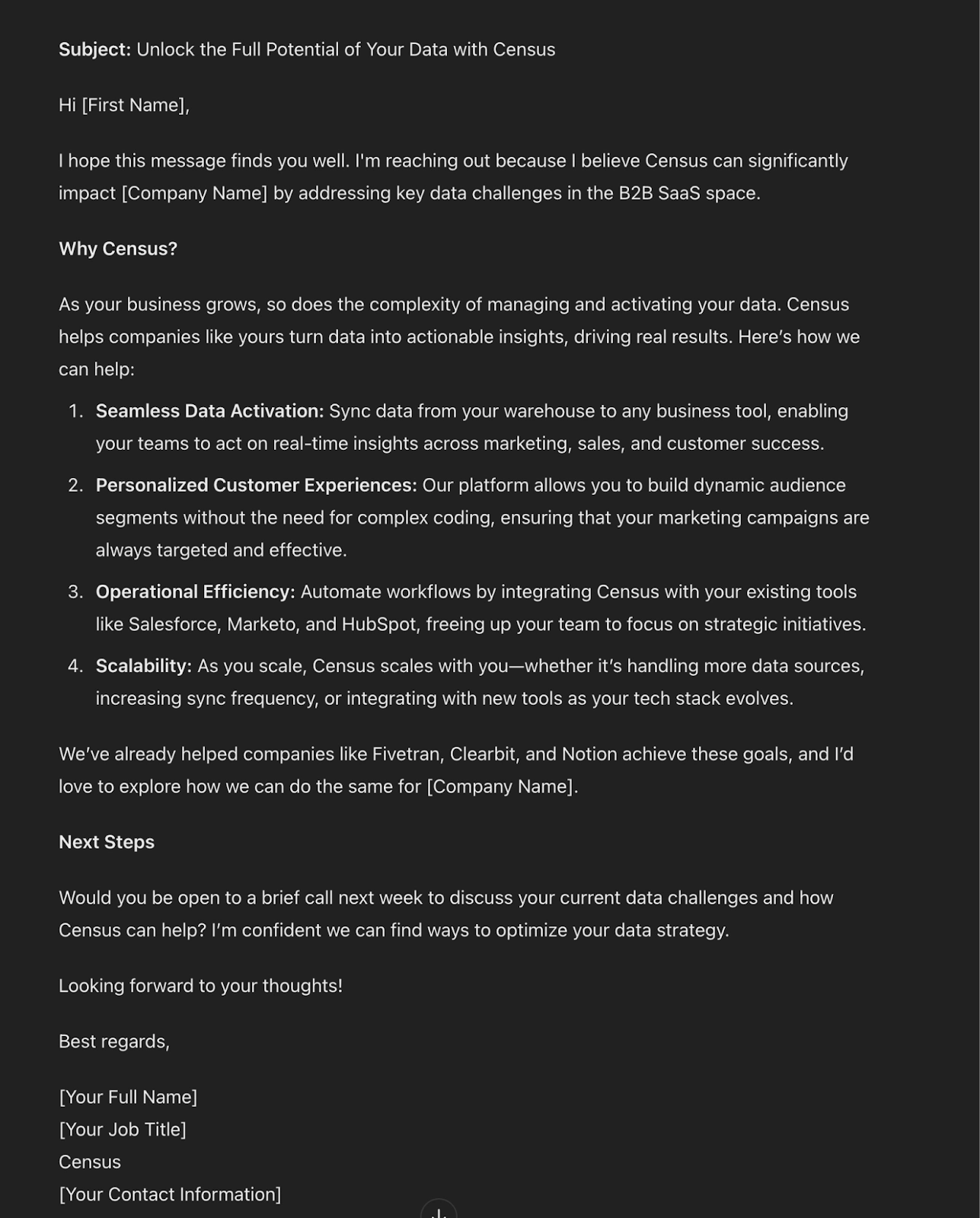

Savvier prompters might know to include a description of what good looks like. With a bit more information, we can prompt the LLM to output something shorter and more specific.

This is looking fine. But fine is for parking tickets, not top performers. This email could make an effective one-off, but to run a sequence, we probably want something a bit less generic that really digs into a specific part of our data activation solution.

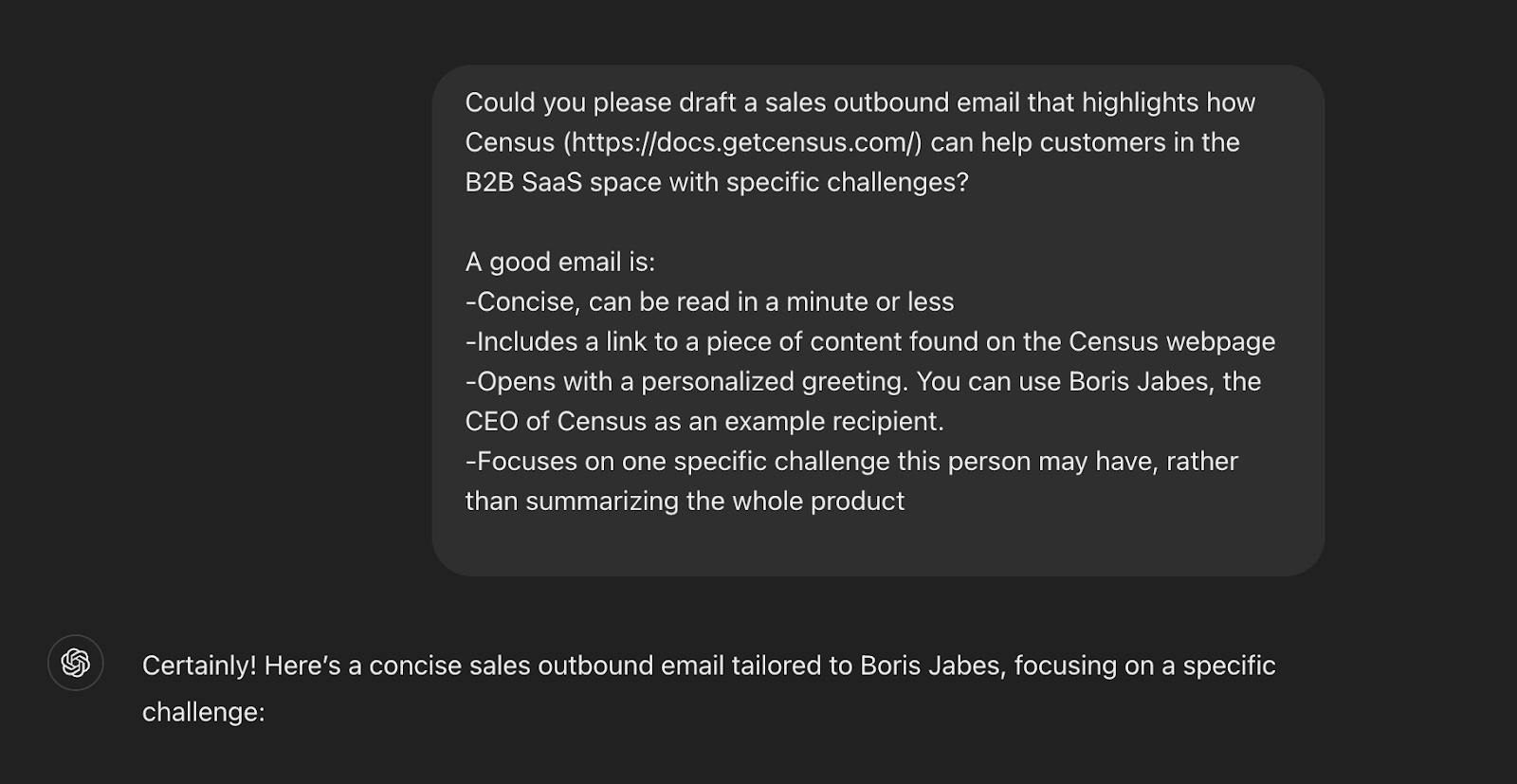

So let’s try one more time. This time, let’s really flex what the LLM can do with a more complex prompt. We’ll number the tasks so it doesn’t get confused, while still including a strong definition of success. Because this is a long prompt with several steps, we’ll also remind it to be concise and consider all of the instructions before proceeding.

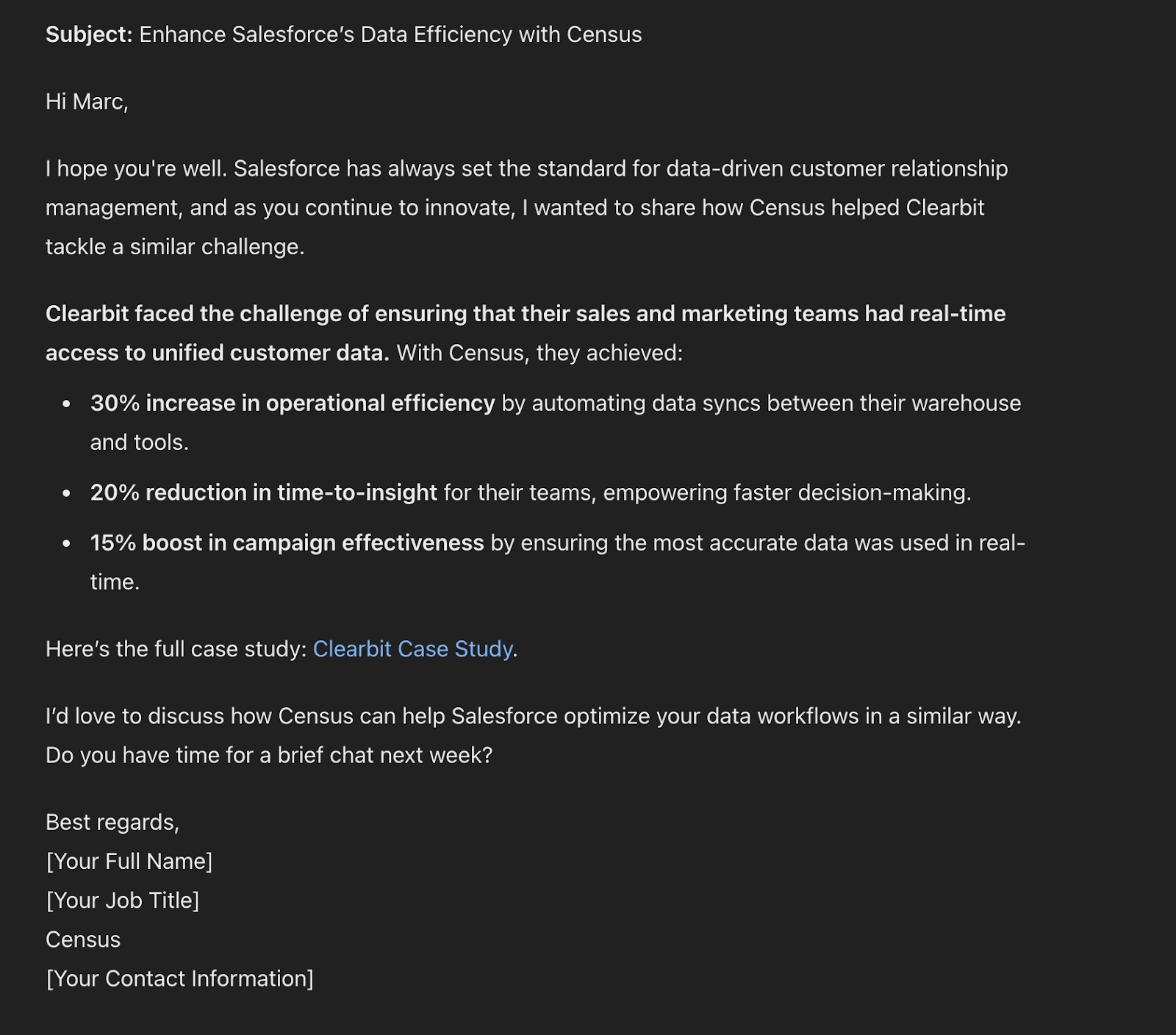

With a bit more careful prompting, we get an outbound email that looks like this:

Now THAT’S an email. I may want to tweak this slightly before sending it out under my own name, but it looks pretty good to me.

Level Two: LLM prompts that scale

Not having to write emails by hand is pretty sweet, but let’s be honest: a lot of AEs have ops teams that automate semi-personalized email sequences on their behalf, or at least write up some starter content.

So how do we make this a bit more than a cool toy?

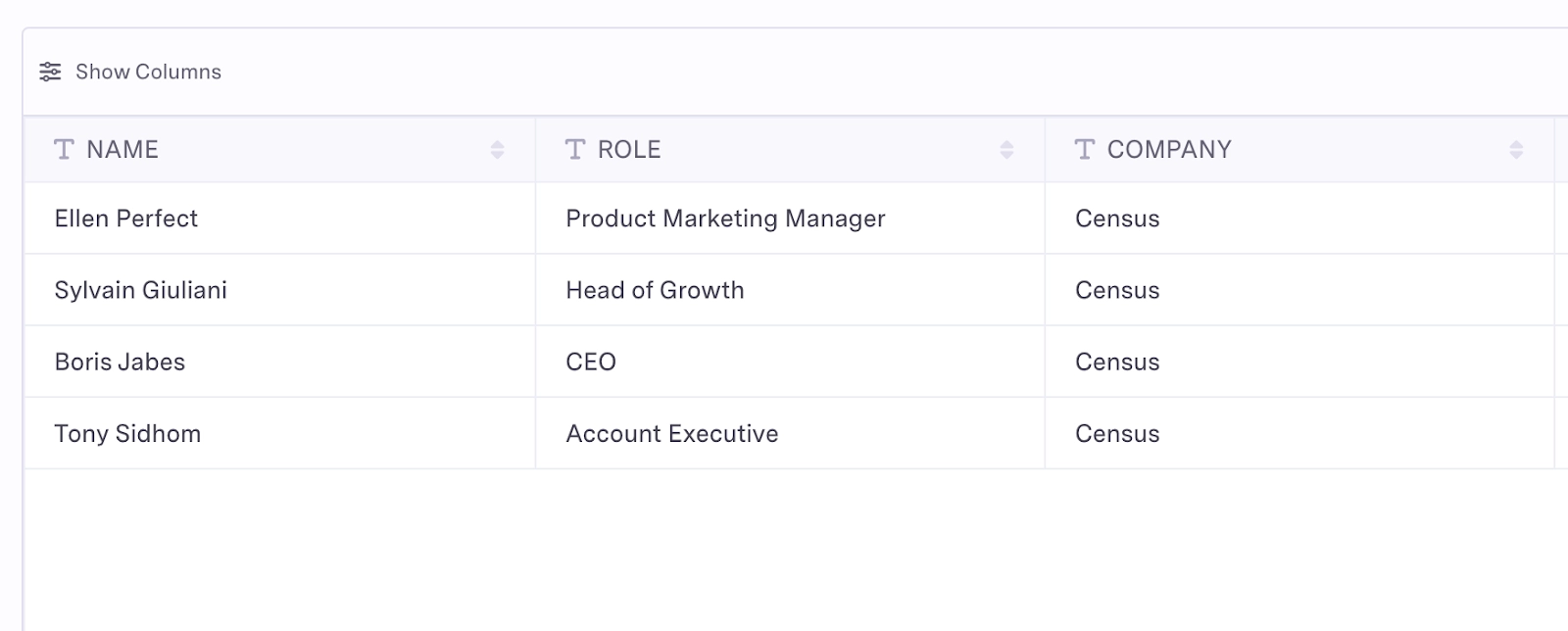

With an LLM that’s connected to our data warehouse, we can start doing a whole lot more. Liquid template prompts let us insert variable fields, such as a contact’s job title and company, so the same prompt produces different output for each record. So if I have a list of contacts I’d like to prospect:

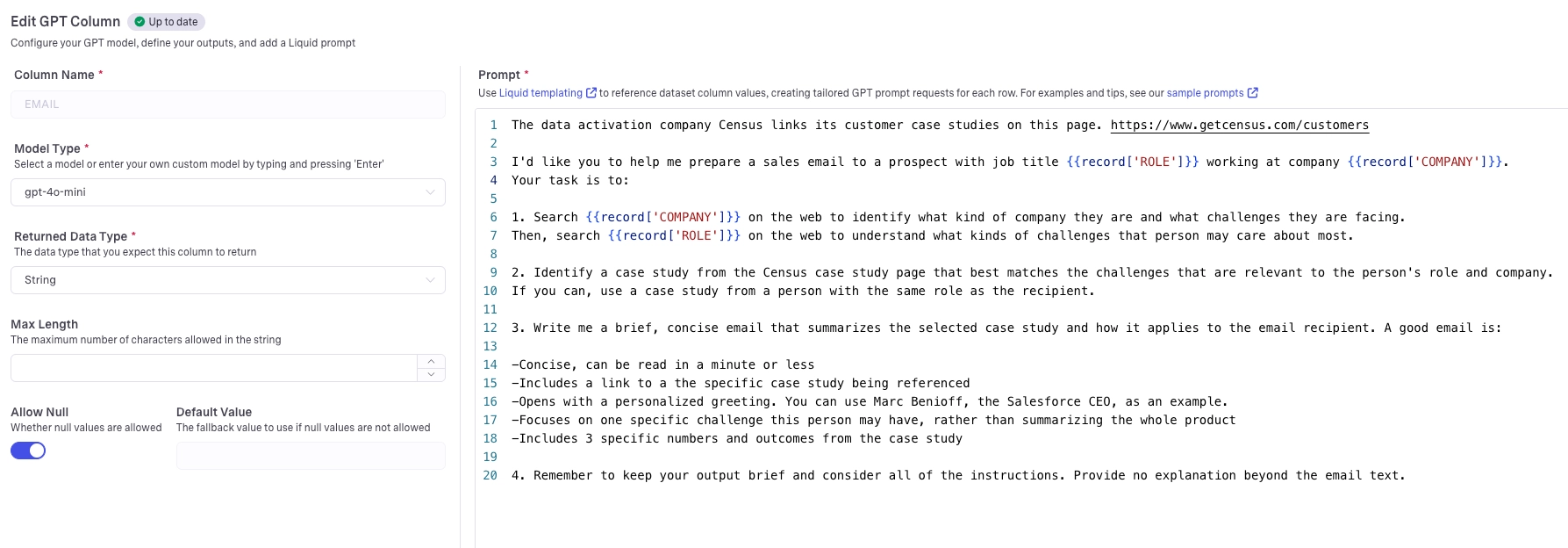

I can use a tool like Census’s GPT columns workspace to build a prompt that looks like this:

The full text of the prompt:

The data activation company Census links its customer case studies on this page. https://www.getcensus.com/customers

I'd like you to help me prepare a sales email to a prospect with job title working at company . Your task is to:

1. Search on the web to identify what kind of company they are and what challenges they are facing. Then, search on the web to understand what kinds of challenges that person may care about most.

2. Identify a case study from the Census case study page that best matches the challenges that are relevant to the person's role and company. If you can, use a case study from a person with the same role as the recipient.

3. Write me a brief, concise email that summarizes the selected case study and how it applies to the email recipient. A good email is:

- Concise, can be read in a minute or less

- Includes a link to the specific case study being referenced

- Opens with a personalized greeting. You can use Marc Benioff, the Salesforce CEO, as an example.

- Focuses on one specific challenge this person may have, rather than summarizing the whole product

- Includes 3 specific numbers and outcomes from the case study

- Remember to keep your output brief and consider all of the instructions. Provide no explanation beyond the email text.

And the model will give me outputs that look like this:

Subject: Unlocking Product Insights at Census

Hi Ellen,

I hope this message finds you well! As a Product Marketing Manager at Census, you’re likely focused on enhancing product insights and ensuring that your marketing strategies align perfectly with customer needs.

I wanted to share a relevant case study from Census' collaboration with Segment, which showcases impressive outcomes that could resonate with you. By implementing Census, Segment was able to achieve:

- A 30% increase in customer engagement through better-targeted campaigns.

- A 40% reduction in time spent on data integration tasks, allowing their team to focus more on strategy.

- And a significant growth in user retention by 25% leveraging accurate customer data insights.

I believe these results underline the potential impact of seamless data activation for your product marketing initiatives. You can check out the full case study here: Segment Case Study

Best,

[Your Name] [Your Position] [Your Contact Information]

Even better, it outputs a personalized email for each name on the list, each featuring a different case study.
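Conceptually, the per-contact step boils down to filling in the template's variable fields once per row. The Python sketch below mimics only Liquid's most basic `{{ field }}` output tags with a regular expression; real Liquid also supports filters, conditionals, and more, and this is not how Census implements it. The template, the field names (`first_name`, `title`, `company`), and the contacts are all hypothetical.

```python
import re
from typing import Dict, List

# Matches a Liquid-style output tag such as {{ company }} or {{company}}.
# This only mimics Liquid's simplest feature; filters, conditionals and
# dotted paths are out of scope for this sketch.
TAG = re.compile(r"\{\{\s*(\w+)\s*\}\}")


def render(template: str, row: Dict[str, str]) -> str:
    """Replace each {{ field }} with that field's value from one contact row."""
    def substitute(match):
        field = match.group(1)
        if field not in row:
            # Fail loudly rather than sending the model a prompt with a hole in it.
            raise KeyError(f"template references missing field: {field}")
        return row[field]
    return TAG.sub(substitute, template)


def render_all(template: str, rows: List[Dict[str, str]]) -> List[str]:
    """One prompt per contact; each would then be sent to the LLM."""
    return [render(template, row) for row in rows]


# Hypothetical template and contacts, for illustration only.
TEMPLATE = ("Write a brief sales email to {{ first_name }}, who works as "
            "{{ title }} at {{ company }}. Keep it under 150 words.")
CONTACTS = [
    {"first_name": "Ana", "title": "Head of Growth", "company": "Example Co"},
    {"first_name": "Sam", "title": "Data Engineer", "company": "Sample Inc"},
]

if __name__ == "__main__":
    for prompt in render_all(TEMPLATE, CONTACTS):
        print(prompt)
```

Raising on a missing field is a deliberate choice: a prompt with an empty slot still runs, but the model has to guess at the missing details.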

With a sync engine like Census, I can write these emails back into my warehouse and sync them to Apollo or any other activation tool. And now I’m sending any number of personalized emails, all for the price of one quick prompt.
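The write-back step amounts to storing one generated email per contact, keyed so that a sync tool can match it to the right record downstream. Here's a sketch that uses an in-memory SQLite database as a stand-in for the warehouse; the table and column names are hypothetical, and in practice Census handles both the write-back and the sync to tools like Apollo.

```python
import sqlite3
from typing import Dict

# An in-memory SQLite database stands in for the data warehouse here.
# Table and column names are hypothetical.


def write_back(conn: sqlite3.Connection, emails: Dict[str, str]) -> None:
    """Store one generated email per contact, replacing any older draft."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS generated_emails ("
        " contact_email TEXT PRIMARY KEY,"  # the key a sync tool can match on
        " email_body TEXT NOT NULL)"
    )
    # INSERT OR REPLACE keeps exactly one row per contact if the job reruns.
    conn.executemany(
        "INSERT OR REPLACE INTO generated_emails (contact_email, email_body) VALUES (?, ?)",
        list(emails.items()),
    )
    conn.commit()


if __name__ == "__main__":
    conn = sqlite3.connect(":memory:")
    write_back(conn, {"ana@example.com": "Hi Ana, ...", "sam@example.com": "Hi Sam, ..."})
    for row in conn.execute("SELECT contact_email, email_body FROM generated_emails ORDER BY contact_email"):
        print(row)
```

Keying on the contact means rerunning the prompt refreshes each draft instead of piling up duplicates for the sync to sort out.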

Want more tips on ways to operationalize your LLMs? Check out our list of helpful prompt recipes here.